Improving Content Quality

The problem

This was one of my favourite projects at Culture Trip because I was able to tackle a complex problem from two different sides of the product – the user-facing side, and the backend through internal tooling.

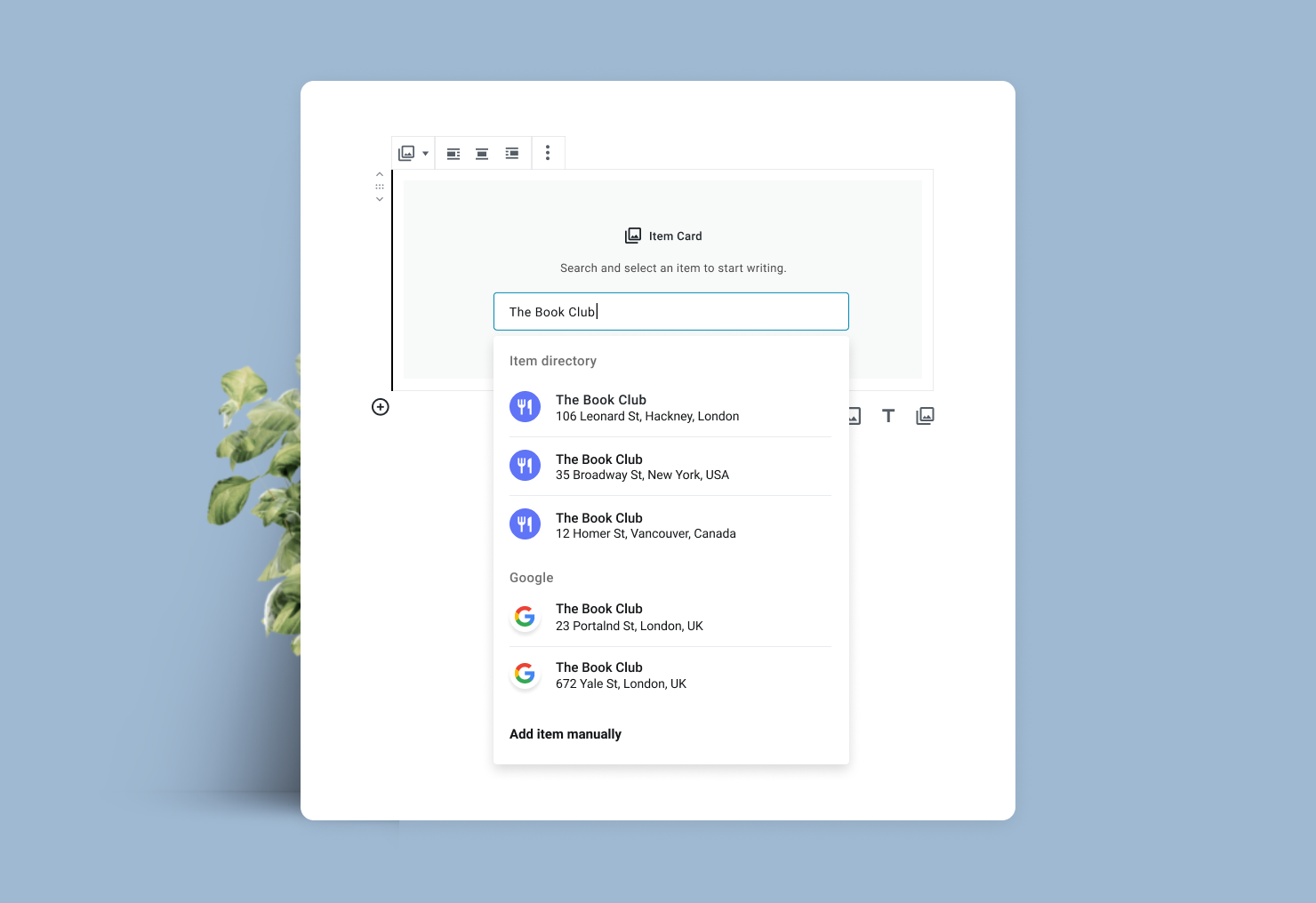

Culture Trip is a travel site where hundreds of writers around the world give local recommendations on where to eat, where to stay and what attractions to visit on holiday, inspiring 15-20 million unique visitors a month. But writers sometimes make mistakes when writing about these places, such as entering the wrong address or opening hours. Or an article can be so old that some of the places it recommends have permanently closed.

As Culture Trip scaled its content, these human errors scaled with it and more content became outdated, sending hundreds of disappointed readers to the wrong places.

The goal was to optimise the way we build and display content so we could improve the quality of our content as we scaled. I started at the root of the problem.

Solution 1 – Optimising the way we build articles

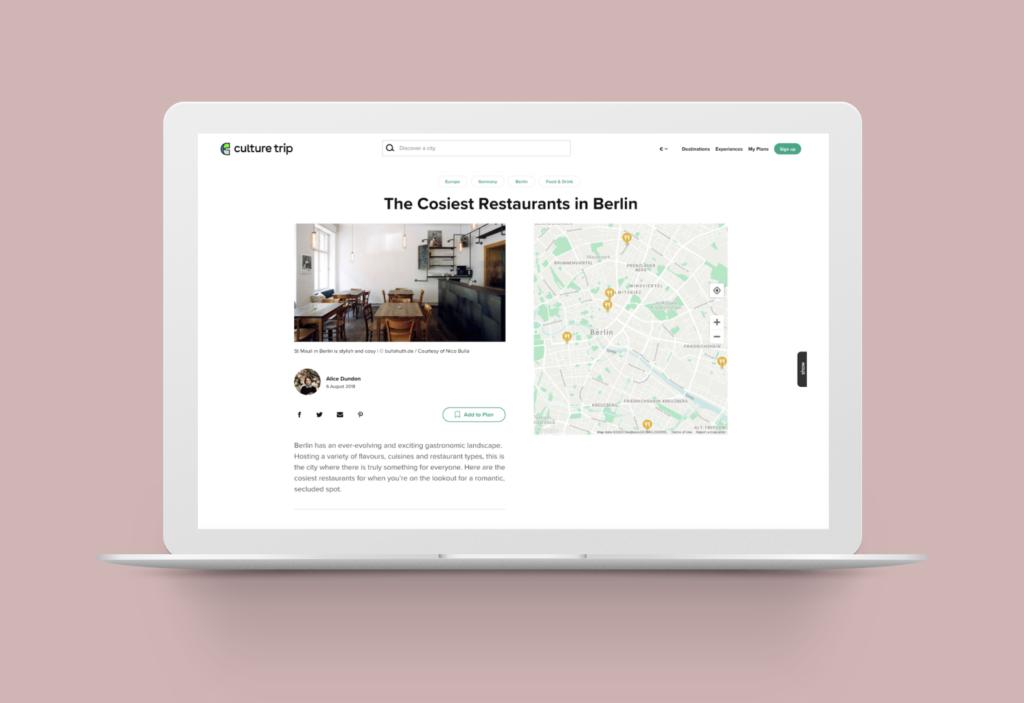

I started by interviewing writers in London and New York and shadowing them as they built articles. With the Product Manager, I mapped out the flow for building an article, and we found that the most time-consuming part was creating ‘Items’. An item is a location (restaurant or attraction) the writer is recommending. To create an item, the writer had to manually enter the name, category, address, opening hours and so on. On average, it took writers 5 minutes to create a single item. This meant a writer could spend around 35 minutes per article just building items, not including their writing time.

Building an article was a long, tedious and unintuitive process, so writers tried to get through it as quickly as possible, guessing many of the details and making errors along the way.

My first instinct was to cut as many steps out of the flow as we could, but when we tested a prototype, writers said it didn’t feel any easier.

So I went back to the drawing board and worked with the engineers on other ways to cut these steps. That’s when one engineer suggested introducing a Google ID step earlier in the flow. A Google ID is essentially a code that automatically pulls in information about a location (like a restaurant or attraction).

By asking for the Google ID earlier in the flow, all of the information could be populated automatically, cutting what had been a 20-step process for writers down to 3 steps.

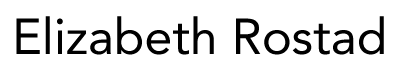

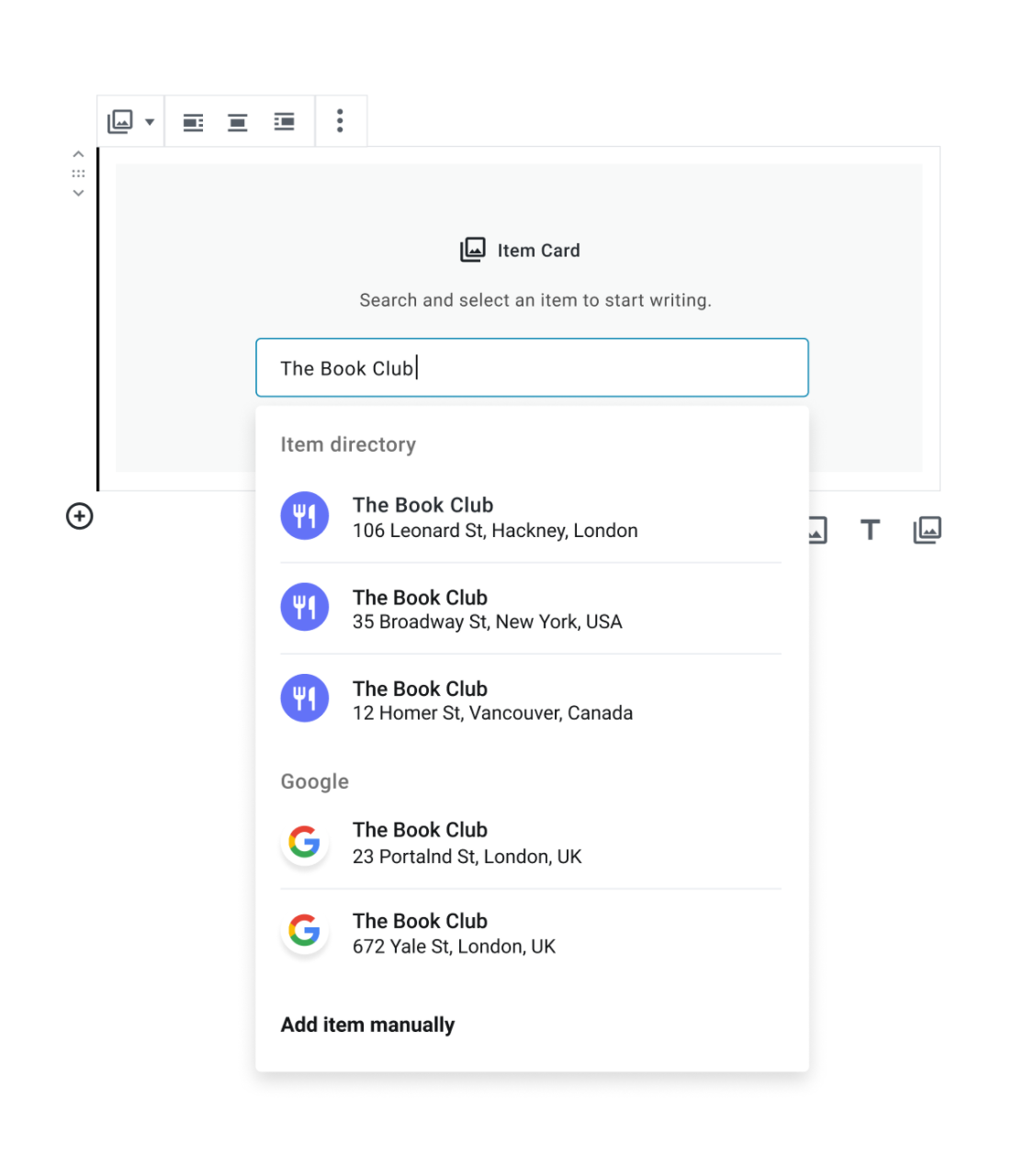

The challenge now was how to include the Google ID in the article creation process. With the engineers, we created a list of steps we wanted the writer to take to avoid duplicates or errors.

First, we wanted writers to check whether the place they were writing about already existed in our database (avoiding further duplicates). If it didn’t, they would create it using a Google ID. For the few remaining locations that couldn’t be found on Google, writers would enter the details manually.

We felt the best way to present these 3 options was a search tool that listed them in that exact order, encouraging writers to follow our recommended steps.

The new search tool, encouraging writers to follow the listed steps when creating a location to minimise errors and duplicates
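The three-option order described above works like a fallback chain. The following is a minimal Python illustration and not Culture Trip’s actual implementation. `Item`, `find_or_create_item`, the name-based duplicate check, and the `google_lookup` and `manual_entry` callbacks are all hypothetical stand-ins. A real tool would call a place-details service and show the writer a form.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional


@dataclass
class Item:
    name: str
    address: str
    opening_hours: str
    source: str  # which step created it: "google" or "manual"


# Hypothetical: resolves a Google ID to place details, or None if not found.
GoogleLookup = Callable[[str], Optional[Dict[str, str]]]
# Hypothetical: stands in for the writer filling in a form by hand.
ManualEntry = Callable[[str], Item]


def normalise(name: str) -> str:
    """Lower-case and collapse whitespace so near-identical names match."""
    return " ".join(name.lower().split())


def find_or_create_item(
    name: str,
    google_id: Optional[str],
    database: Dict[str, Item],
    google_lookup: GoogleLookup,
    manual_entry: ManualEntry,
) -> Item:
    key = normalise(name)

    # Step 1: search our own database first so we don't create a duplicate.
    existing = database.get(key)
    if existing is not None:
        return existing

    # Step 2: if the writer has a Google ID, auto-populate the details from it.
    details = google_lookup(google_id) if google_id else None
    if details is not None:
        item = Item(
            name=details["name"],
            address=details["address"],
            opening_hours=details["opening_hours"],
            source="google",
        )
    else:
        # Step 3: only places that can't be found on Google are entered by hand.
        item = manual_entry(name)

    database[key] = item
    return item
```

Putting the checks in this order means a writer only reaches manual entry, the most error-prone step, when the first two options have failed.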

The results

These optimisations cut what had been a 35-minute process per article down to just 5 minutes. Making writers more efficient produced an estimated $84K in cost savings, while minimising human errors along the way.

Solution 2 – Optimising our old content

The next problem to tackle was outdated content sitting on the site. We couldn’t simply delete these articles, since we relied on their organic traffic and some content that was 5 years old was still good. Instead, we needed to remove the outdated recommendations within them, and our solution for flagging these issues was to crowdsource the information.

If hundreds of our readers were going to the App Store to leave reviews saying a place we recommended had permanently closed, why not give them a feature to report it directly, so we could act on that feedback? The question was whether users would use it, and how.

Hundreds of our readers were going to the App Store to leave reviews

Flagging issues in our content wasn’t something we could test through user research, so I built an MVP to find out whether the concept was worth investing in. We needed to know where readers would go to report a problem and what issues they’d report, so we added a button under each recommendation and one at the bottom of each article. Both led to a SurveyMonkey form I built.

What we learned from launching an MVP

After 2 weeks we had over 150 responses, and this is what we found:

- Users would report issues.

- The issues weren’t what we’d assumed. We discovered 5 new issues in our content.

- Users were also giving us their own recommendations and even reviewing the content, which helped validate ideas already on our product roadmap.

Overall, it was a worthwhile test to run. We were able to validate some of our assumptions and collect new insights that ultimately helped shape the final design. The biggest learning was that we’d have 3 different types of feedback coming in from users (issues, reviews and recommendations), so I designed the flow to funnel each type to the appropriate team to act on it.

The results

Culture Trip is still assessing the impact of this final feature, but in the first 2 months it helped flag 433 locations in our content that have since been updated or removed. That potentially means 433 fewer angry App Store reviews and 433 more happy readers.

Conclusion

The two solutions were very different. The front end used crowdsourced information to flag errors in our content, while the backend focused on making our writers more efficient when building articles and removing most of the manual entry. In the end, we were able to improve the quality of our content from both sides of the product, with the added benefit of saving $84K per year along the way.
